Visual Studio Code: Remote SSH and why you should not trust it

Microsoft Visual Studio Code has become the go-to IDE, but is the Remote SSH extension really to be trusted?

You need a powerful IDE to edit your code locally and remotely via SSH. Which one are you going to choose?

I detest Microsoft with a passion, but it seems their Visual Studio Code has become the industry standard; Emacs and Vim simply don't cut it anymore. So I naively thought:

How bad can it be?

Wow, was I shocked. It seems their incompetence and penchant for bloatware and inefficiency permeate their entire business.

After the third VPS crash and forced reboot, I started to have my suspicions, so I did some digging.

The first thing I noticed was a lot of node processes running from a path with vscode in it. Surprise!

So the first failsafe solution was a crontab entry:

```
32 5 * * * ~/kill-vscode.sh
```

With the content:

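```sh
#!/bin/sh
# SIGTERM everything whose command line mentions .vscode-server.
# '[.]' stops grep from matching its own process, and xargs -r skips
# kill when nothing matched. From cron, sudo needs a NOPASSWD rule.
ps aux | grep -i '[.]vscode-server' | awk '{print $2}' | xargs -r sudo kill -15
```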

But after a couple more crashes, I decided enough was enough.

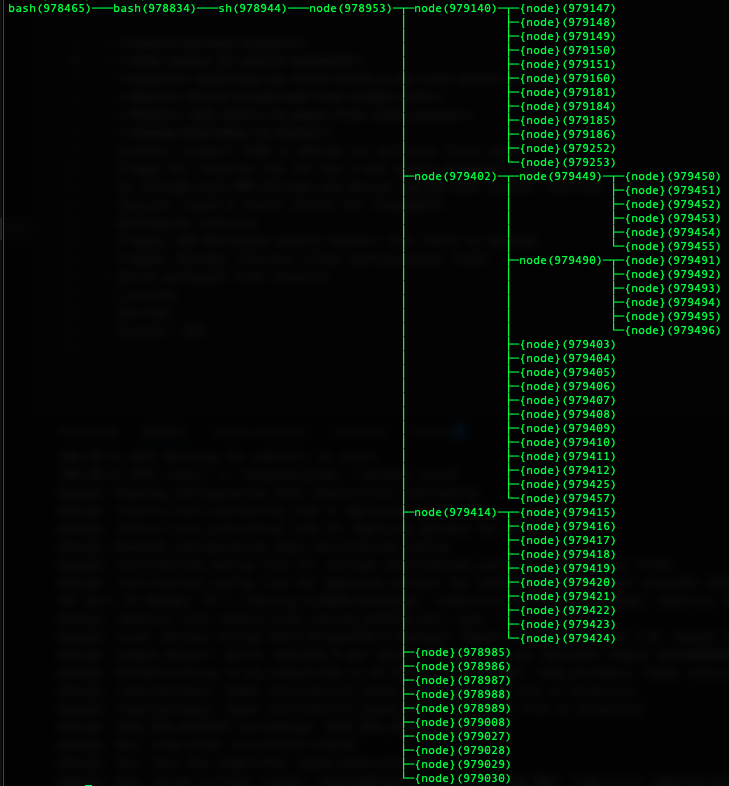

To see the full extent of the bloat, go through your running shell processes during a remote connection with pstree, and eventually you will find something similar to the image above:

```sh
ps aux | grep bash
pstree -p 978465   # use the PID of one of your own shells from the previous command
```
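If you want a number rather than a picture, a small sketch like the one below adds up the resident memory of every process whose command line mentions .vscode-server. It assumes Linux with procps (`pgrep`, `ps`); the function name `vscode_rss_kb` is only illustrative, and because RSS counts shared pages once per process, treat the total as an upper bound.

```sh
# Sum the resident set size (KiB) of all processes whose full command
# line matches a pattern (default: anything under .vscode-server).
# pgrep -f matches the whole command line and never matches itself.
vscode_rss_kb() {
  pattern="${1:-[.]vscode-server}"
  pids=$(pgrep -f "$pattern" | paste -sd, -)
  if [ -z "$pids" ]; then
    echo 0
    return 0
  fi
  ps -o rss= -p "$pids" | awk '{ total += $1 } END { print total + 0 }'
}
```

Running `vscode_rss_kb` once while connected and again after closing the remote window gives a rough idea of how much memory is left behind.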

The ~/.vscode-server directory on my VPS takes up 421MB. It includes two directories, bin and cli, each around 181MB and with near-identical node_modules trees. Why am I not surprised?
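To see where the space goes on your own server, something like this lists each top-level entry of ~/.vscode-server by size. It is a sketch that assumes GNU `du` and `sort` (for their `-h` flags); `server_du` is a hypothetical helper name.

```sh
# List the size of every top-level entry (including hidden ones) in a
# directory, smallest first, so the biggest offenders end up at the bottom.
server_du() {
  dir="${1:-$HOME/.vscode-server}"
  # ".[!.]*" picks up hidden entries such as the connection token files;
  # unmatched globs stay literal, so du's complaints about them are silenced.
  du -sh "$dir"/* "$dir"/.[!.]* 2>/dev/null | sort -h
}
```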

So how can I use this as just a remote editor without the bloat?

Once the remote login has a shell, the extension starts a shell script called code-server (Visual Studio Code Server):

```
.vscode-server/bin/ddc367ed.../bin/code-server --start-server --server-data-dir .vscode-server --host=127.0.0.1 --accept-server-license-terms --enable-remote-auto-shutdown --port=0 --telemetry-level error --connection-token-file .vscode-server/.ddc367ed...
```

This simply runs a Node script called server-main.js, which is 1.1MB minified:

```
.vscode-server/bin/ddc367ed.../node .vscode-server/bin/ddc367ed.../out/server-main.js --start-server --server-data-dir .vscode-server --host=127.0.0.1 --accept-server-license-terms --enable-remote-auto-shutdown --port=0 --telemetry-level error --connection-token-file .vscode-server/.ddc367ed...
```

That in turn starts several other node processes, which start yet more node processes, too numerous to bother with:

```
.vscode-server/bin/ddc367ed.../out/bootstrap-fork --type=fileWatcher
.vscode-server/bin/ddc367ed.../out/bootstrap-fork --type=extensionHost --transformURIs --useHostProxy=false
.vscode-server/bin/ddc367ed.../out/bootstrap-fork --type=ptyHost --logsPath .vscode-server/data/logs/20250510T002044
```
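The same chain can be walked upwards from any one of those PIDs, which shows whether a node process still hangs off the code-server script or has been re-parented (typically to PID 1 or a subreaper such as your user's systemd instance) after its parent went away. This is a minimal, Linux-only sketch that reads the `PPid:` field of `/proc/<pid>/status`; `ancestry` is a hypothetical helper name.

```sh
# Print a process and each of its ancestors as "PID command" lines,
# stopping at PID 1 or when a parent can no longer be read.
ancestry() {
  pid=$1
  while [ -n "$pid" ] && [ "$pid" -gt 0 ] 2>/dev/null; do
    # /proc/<pid>/cmdline is NUL-separated; show the first 100 characters
    cmd=$(tr '\0' ' ' 2>/dev/null < "/proc/$pid/cmdline" | cut -c1-100)
    printf '%s %s\n' "$pid" "$cmd"
    [ "$pid" -eq 1 ] && break
    pid=$(awk '/^PPid:/ { print $2 }' "/proc/$pid/status" 2>/dev/null)
  done
}
```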

And what do you think is left behind when the remote connection is closed from the IDE...

Come on, you can guess, can't you? I think if there were a pub quiz involving Microsoft developers asking what free() does, you would always get the same answer:

I have no idea!

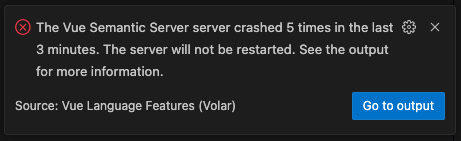

The answer, of course, is everything! The code-server process stays behind for as long as it wants, chewing up your memory and CPU. I think my crash problem was that some build processes drove the code server's file watcher process into meltdown, which dropped the SSH connection and essentially locked me out until I forced a reboot. Either that, or the server was started more than once and ended up fighting itself. We'll never know.
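To check what survives a disconnect on your own machine, a sketch along these lines lists every process whose command line mentions .vscode-server, longest-running first, with its parent PID, elapsed seconds and resident memory. It assumes procps-ng `ps` (for the `etimes` column); `vscode_procs` is a hypothetical name.

```sh
# List matching processes as: PID PPID ELAPSED_SECONDS RSS_KIB COMMAND,
# sorted so the longest-running ones come first.
vscode_procs() {
  pattern="${1:-[.]vscode-server}"
  pids=$(pgrep -f "$pattern" | paste -sd, -)
  [ -n "$pids" ] || return 0
  ps -o pid=,ppid=,etimes=,rss=,args= -p "$pids" | sort -k3,3nr
}
```

Run it after closing the remote window from the IDE: anything still listed is what was left behind.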

I also found a race condition: if your remote Linux machine doesn't return the output of `uname -rsv` instantaneously, Microsoft obviously assumes the machine is Windows, and the connection fails trying to start PowerShell.

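I haven't yet found a way to disable all this cruft, but I did find that the default IntelliSense cache size and memory limit are set ridiculously high in VS Code, which may have an effect server-side (open Settings and search for "Intelli"; in the C/C++ extension these are `C_Cpp.intelliSenseCacheSize` and `C_Cpp.intelliSenseMemoryLimit`). I lowered them from 5120MB to 128MB and from 4096MB to 256MB respectively.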

EDIT: The Solution

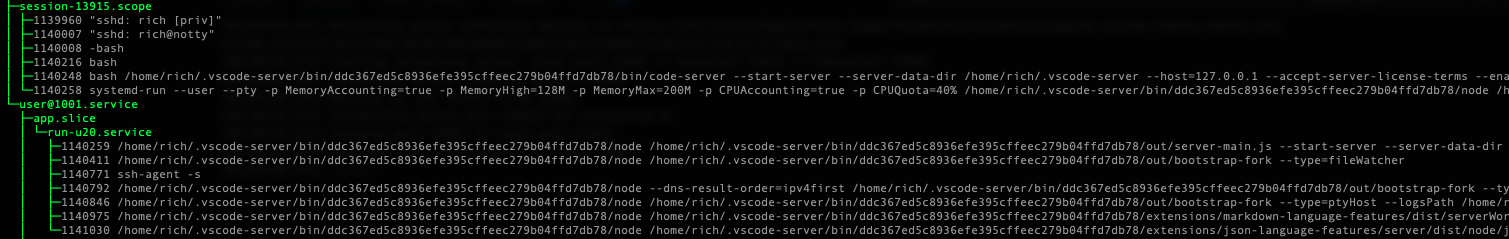

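The solution I have found is to modify the code-server script in `~/.vscode-server/bin/<commit-id>/bin` (the hashed directory, `ddc367ed...` in the command lines above) so that it runs the node process under systemd-run with a cgroup CPU and memory limit. Because that directory is named after the VS Code build, expect to reapply the change whenever VS Code updates. Modify your script as below.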

NOTE: this is for cgroup v2 and a fairly modern systemd (Ubuntu 24.04).

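```sh
#!/usr/bin/env sh
# Limits for the VS Code server's cgroup (tune to taste)
MEM_HIGH="128M"   # MemoryHigh: above this the kernel throttles and reclaims
MEM_MAX="200M"    # MemoryMax: hard limit, must be greater than MEM_HIGH
CPU_QUOTA="40%"   # 100% = one full CPU core

case "$1" in
  --inspect*) INSPECT="$1"; shift;;
esac

ROOT="$(dirname "$(dirname "$(readlink -f "$0")")")"

# Run the server process in a transient user unit (its own cgroup) with
# CPU and memory restrictions. The command is passed to systemd-run
# directly instead of through an unquoted $CMD string, so paths and
# arguments containing spaces survive intact.
systemd-run \
  --user \
  --pty \
  -p MemoryAccounting=true \
  -p MemoryHigh="$MEM_HIGH" \
  -p MemoryMax="$MEM_MAX" \
  -p CPUAccounting=true \
  -p CPUQuota="$CPU_QUOTA" \
  "$ROOT/node" ${INSPECT:-} "$ROOT/out/server-main.js" "$@"
```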

This confines the node process, and everything it spawns, to its own cgroup, a resource-limited sandbox of sorts. Although the 40% CPU limit might seem generous, CPUQuota is relative to a single core (100% means one full core), so, oddly, you can set it as high as 400% if you have 4 cores.
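The percentage arithmetic is easy to get wrong, so here is a small sketch of it. It assumes systemd's default CPU quota period of 100ms (100000µs), in which case `CPUQuota=40%` shows up as `40000 100000` in the unit's `cpu.max` file, i.e. 40ms of CPU time per 100ms period. `cpu_max_for_quota` and `quota_for_share` are hypothetical helper names.

```sh
# Translate a CPUQuota= percentage into the "quota period" pair the kernel
# reports in cpu.max (both in microseconds), assuming the default 100ms period.
cpu_max_for_quota() {
  pct=${1%\%}          # accept "40" or "40%"
  period=100000
  echo "$(( pct * period / 100 )) $period"
}

# CPUQuota= value that allows a given share (in %) of the whole machine:
# 100% per core, so half of a 4-core box is 200%.
quota_for_share() {
  share=$1
  cores=${2:-$(nproc)}
  echo "$(( share * cores ))%"
}
```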

You can check it is working by doing:

```sh
systemctl status
```

You should find the node processes running under user.slice, in a transient run-u*.service unit inside your user's app.slice.

You can then check the cgroup settings using the following commands:

```sh
# user-1001 and run-u20.service are specific to my machine; substitute your own UID and unit name
more /sys/fs/cgroup/user.slice/user-1001.slice/user@1001.service/app.slice/run-u20.service/memory.max
more /sys/fs/cgroup/user.slice/user-1001.slice/user@1001.service/app.slice/run-u20.service/memory.high
more /sys/fs/cgroup/user.slice/user-1001.slice/user@1001.service/app.slice/run-u20.service/cpu.max
systemd-cgtop
```
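Those paths hard-code the user ID (1001) and the transient unit name (run-u20), both of which differ between machines and between runs. The sketch below instead looks up a process's cgroup from `/proc/<pid>/cgroup` and prints the same three files. It assumes the cgroup v2 hierarchy is mounted at /sys/fs/cgroup (the Ubuntu 24.04 default); `cgroup_dir`, `show_limits` and the `PROC_ROOT`/`CGROUP_ROOT` overrides are hypothetical, and the overrides exist only to make it testable.

```sh
# Resolve the cgroup v2 directory of a process from /proc/<pid>/cgroup.
# The unified hierarchy is the entry that starts with "0::".
cgroup_dir() {
  rel=$(sed -n 's/^0:://p' "${PROC_ROOT:-/proc}/$1/cgroup") || return 1
  [ -n "$rel" ] || return 1
  printf '%s%s\n' "${CGROUP_ROOT:-/sys/fs/cgroup}" "$rel"
}

# Print the memory and CPU limits that apply to the process's cgroup.
show_limits() {
  dir=$(cgroup_dir "$1") || return 1
  for f in memory.high memory.max cpu.max; do
    printf '%s: %s\n' "$f" "$(cat "$dir/$f")"
  done
}
```

For example, `show_limits "$(pgrep -o -f server-main.js)"` prints the limits of the oldest matching server process.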

More Info

You can also see if the cgroup limits are being hit with:

```sh
# again, user-1001 and run-u23.service will differ on your machine
more /sys/fs/cgroup/user.slice/user-1001.slice/user@1001.service/app.slice/run-u23.service/cpu.stat
more /sys/fs/cgroup/user.slice/user-1001.slice/user@1001.service/app.slice/run-u23.service/memory.events
```

Look specifically at the `nr_throttled` value in cpu.stat and the `high` and `max` counters in memory.events.
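A sketch that pulls just those counters out of a unit's cgroup directory. Per the cgroup v2 documentation, `nr_throttled` counts the periods in which the group used up its quota, `high` counts how often usage went over memory.high and the group was throttled into reclaim, and `max` counts how often usage was about to exceed memory.max; `oom_kill` is included too, since it tells you whether anything was actually killed. `limit_hits` is a hypothetical name and takes the cgroup directory as its argument.

```sh
# Print the throttling and memory-limit counters for one cgroup directory.
limit_hits() {
  dir=$1
  awk '$1 == "nr_throttled" { print "cpu nr_throttled:", $2 }' "$dir/cpu.stat"
  awk '$1 == "high" || $1 == "max" || $1 == "oom_kill" { print "memory", $1 ":", $2 }' \
    "$dir/memory.events"
}
```

Counters that keep climbing while you work are a sign the corresponding limit is too tight.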

I found that 40% CPU was too low, but 70% was reasonable.

A 128M memory high limit was too low, but 384M was more reasonable.

Obviously the max setting must be greater than high.
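Since systemd sizes such as `384M` are binary (1M = 1024 × 1024 bytes), a small guard like this could go near the top of the modified code-server script to catch a MEM_MAX that is not above MEM_HIGH before systemd-run is called. It is a sketch that handles integer sizes only; `to_bytes` and `check_mem_limits` are hypothetical helpers, and 512M below is just an example value.

```sh
# Convert a systemd-style size with an optional K, M or G suffix
# (base 1024) into bytes.
to_bytes() {
  case $1 in
    *K) echo $(( ${1%K} * 1024 )) ;;
    *M) echo $(( ${1%M} * 1024 * 1024 )) ;;
    *G) echo $(( ${1%G} * 1024 * 1024 * 1024 )) ;;
    *)  echo "$1" ;;
  esac
}

# Fail (non-zero status) when the max limit is not above the high limit.
check_mem_limits() {
  high=$(to_bytes "$1")
  max=$(to_bytes "$2")
  if [ "$max" -le "$high" ]; then
    echo "MemoryMax ($2) must be greater than MemoryHigh ($1)" >&2
    return 1
  fi
}
```

For example, `check_mem_limits 384M 512M` passes, while raising MEM_HIGH to 384M but leaving the script's original `MEM_MAX="200M"` makes it fail.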

Imagine that: 384MB of RAM just for a text editor to run remotely with a few extensions!

The moral of the story is: yet another mass of wasted hours of my life I won't get back attempting to use a Macrosloth product.
